1. Introduction to HBase Coprocessors

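2. Implementation Approach

2.1 Observer coprocessor hooks (the two hooks used later to build the secondary index)

- postPut: called by the Region Server after a put operation has been executed

- postDelete: called by the Region Server after a delete has been executed

- Approach:

- 1. Use Elasticsearch as the index store

- 2. Use the postPut hook to write the same data to Elasticsearch whenever data is inserted into HBase

- 3. Use the postDelete hook to delete the corresponding document from Elasticsearch whenever data is deleted from HBase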
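The approach above can be sketched without any HBase or Elasticsearch dependency. The snippet below is only an in-memory illustration: `WriteObserver`, `InMemoryIndex`, and `InMemoryTable` are hypothetical names invented here, standing in for the RegionObserver hooks, the Elasticsearch index, and an HBase region respectively.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for the two RegionObserver hooks used in this article. */
interface WriteObserver {
    void postPut(String rowKey, Map<String, String> columns);
    void postDelete(String rowKey);
}

/** Hypothetical in-memory "index" playing the role of Elasticsearch. */
class InMemoryIndex implements WriteObserver {
    final Map<String, Map<String, String>> docs = new HashMap<>();

    @Override
    public void postPut(String rowKey, Map<String, String> columns) {
        // Upsert: merge the new columns into any existing document for this row key.
        docs.computeIfAbsent(rowKey, k -> new HashMap<>()).putAll(columns);
    }

    @Override
    public void postDelete(String rowKey) {
        // The document id is the row key, so a delete removes the whole document.
        docs.remove(rowKey);
    }
}

/** Hypothetical in-memory "table" playing the role of an HBase region. */
class InMemoryTable {
    private final Map<String, Map<String, String>> rows = new HashMap<>();
    private final WriteObserver observer;

    InMemoryTable(WriteObserver observer) {
        this.observer = observer;
    }

    void put(String rowKey, Map<String, String> columns) {
        rows.computeIfAbsent(rowKey, k -> new HashMap<>()).putAll(columns);
        observer.postPut(rowKey, columns); // hook fires after the write
    }

    void delete(String rowKey) {
        rows.remove(rowKey);
        observer.postDelete(rowKey); // hook fires after the delete
    }
}
```

The point of the sketch is that the table never needs to know what the observer does. Every write is mirrored simply because the hook runs after it.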

3. Coprocessor Implementation

- HBase coprocessor (server-side) code

package xxx.xxx.hbase.coprocessor;

import xxx.xxx.es.util.ESClient;

import xxx.xxx.es.util.ElasticSearchBulkOperator;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.Cell;

import org.apache.hadoop.hbase.CellUtil;

import org.apache.hadoop.hbase.CoprocessorEnvironment;

import org.apache.hadoop.hbase.client.Delete;

import org.apache.hadoop.hbase.client.Durability;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.coprocessor.ObserverContext;

import org.apache.hadoop.hbase.coprocessor.RegionCoprocessor;

import org.apache.hadoop.hbase.coprocessor.RegionCoprocessorEnvironment;

import org.apache.hadoop.hbase.coprocessor.RegionObserver;

import org.apache.hadoop.hbase.util.Bytes;

import org.apache.hadoop.hbase.wal.WALEdit;

import org.apache.log4j.Logger;

import java.io.IOException;

import java.util.*;

/**

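* General-purpose HBase secondary-index coprocessor

* When attaching the coprocessor to a table, just pass the ES index name and type name as arguments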

*/

public class UniversalHBase2Index implements RegionObserver , RegionCoprocessor {

private static final Logger LOG = Logger.getLogger(UniversalHBase2Index.class);

private String index;

private String type;

@Override

public Optional<RegionObserver> getRegionObserver() {

return Optional.of(this);

}

@Override

public void start(CoprocessorEnvironment env) throws IOException {

// init ES client

ESClient.initEsClient();

Configuration configuration = env.getConfiguration();

index = configuration.get("index");

type = configuration.get("type");

LOG.info("****init start*****");

}

@Override

public void stop(CoprocessorEnvironment env) throws IOException {

ESClient.closeEsClient();

// shut down the scheduled commit task

ElasticSearchBulkOperator.shutdownScheduEx();

LOG.info("****end*****");

}

@Override

public void postPut(ObserverContext<RegionCoprocessorEnvironment> e, Put put, WALEdit edit, Durability durability) throws IOException {

String indexId = Bytes.toString(put.getRow()); // decode the row key as UTF-8 instead of the platform charset

try {

NavigableMap<byte[], List<Cell>> familyMap = put.getFamilyCellMap();

Map<String, Object> infoJson = new HashMap<>();

Map<String, Object> json = new HashMap<>();

for (Map.Entry<byte[], List<Cell>> entry : familyMap.entrySet()) {

for (Cell cell : entry.getValue()) {

String key = Bytes.toString(CellUtil.cloneQualifier(cell));

String value = Bytes.toString(CellUtil.cloneValue(cell));

json.put(key, value);

}

}

// infoJson wraps the columns under a family-style key, but only the flat json map is sent to ES below

infoJson.put("fn", json);

LOG.info(json.toString());

ElasticSearchBulkOperator.addUpdateBuilderToBulk(ESClient.client.prepareUpdate(index,type, indexId).setDocAsUpsert(true).setDoc(json));

LOG.info("**** postPut success*****");

} catch (Exception ex) {

// log the configured index name (not a hard-coded one) and keep the stack trace

LOG.error("observer put a doc, index [ " + index + " ]" + "indexId [" + indexId + "] error : " + ex.getMessage(), ex);

}

}

@Override

public void postDelete(ObserverContext<RegionCoprocessorEnvironment> e, Delete delete, WALEdit edit, Durability durability) throws IOException {

String indexId = Bytes.toString(delete.getRow()); // decode the row key as UTF-8 instead of the platform charset

try {

ElasticSearchBulkOperator.addDeleteBuilderToBulk(ESClient.client.prepareDelete(index,type, indexId));

LOG.info("**** postDelete success*****");

} catch (Exception ex) {

// log the configured index name (not a hard-coded one) and keep the stack trace

LOG.error("observer delete a doc, index [ " + index + " ]" + "indexId [" + indexId + "] error : " + ex.getMessage(), ex);

}

}

}
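The cell-to-document step inside postPut can be looked at in isolation. Below is a minimal sketch using only the JDK: `RawCell` and `CellDocBuilder` are hypothetical names introduced here (a real HBase `Cell` carries much more), and the decoding is explicitly UTF-8. Like the coprocessor above, it keys fields by qualifier only, so identical qualifiers in different column families would overwrite each other.

```java
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

final class CellDocBuilder {

    /** Hypothetical minimal stand-in for an HBase Cell: qualifier and value bytes only. */
    static final class RawCell {
        final byte[] qualifier;
        final byte[] value;

        RawCell(byte[] qualifier, byte[] value) {
            this.qualifier = qualifier;
            this.value = value;
        }
    }

    /** Decodes bytes as UTF-8, independent of the JVM's default charset. */
    static String decode(byte[] bytes) {
        return new String(bytes, StandardCharsets.UTF_8);
    }

    /** Flattens cells into a qualifier -> value map; a later cell wins on duplicate qualifiers. */
    static Map<String, Object> toDocument(Iterable<RawCell> cells) {
        Map<String, Object> doc = new LinkedHashMap<>();
        for (RawCell cell : cells) {
            doc.put(decode(cell.qualifier), decode(cell.value));
        }
        return doc;
    }
}
```

Every value ends up as a string here, exactly as in the coprocessor, so numeric fields in the index mapping rely on Elasticsearch accepting numeric strings.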

- Utility classes

- Elasticsearch client connection code

package xxx.xxx.es.util;

import org.apache.commons.logging.Log;

import org.apache.commons.logging.LogFactory;

import org.elasticsearch.client.Client;

import org.elasticsearch.common.settings.Settings;

import org.elasticsearch.common.transport.TransportAddress;

import org.elasticsearch.transport.client.PreBuiltTransportClient;

import java.net.InetAddress;

import java.net.UnknownHostException;

/**

* ES client class

*/

public class ESClient {

public static Client client;

private static final Log log = LogFactory.getLog(ESClient.class);

/**

* init ES client

*/

public static void initEsClient() throws UnknownHostException {

log.info("Initializing ES connection");

System.setProperty("es.set.netty.runtime.available.processors", "false");

Settings esSettings = Settings.builder()

.put("cluster.name", "log_cluster")// name of the ES cluster to connect to

.put("client.transport.sniff", true)

.build();

// fill in the addresses of your own ES cluster

client = new PreBuiltTransportClient(esSettings)

.addTransportAddress(new TransportAddress(InetAddress.getByName("10.248.xxx.01"), 9300))

.addTransportAddress(new TransportAddress(InetAddress.getByName("10.248.xxx.02"), 9300))

.addTransportAddress(new TransportAddress(InetAddress.getByName("10.248.xxx.02"), 9300));

log.info("ES connection initialized");

}

/**

* Close ES client

*/

public static void closeEsClient() {

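// guard against stop() being called when initialization never succeeded

if (client != null) {

client.close();

}

log.info("ES connection closed");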

}

}

- Elasticsearch bulk operation utility (insert or delete)

package xxx.xxx.es.util;

import org.apache.commons.logging.Log;

import org.apache.commons.logging.LogFactory;

import org.elasticsearch.action.bulk.BulkRequestBuilder;

import org.elasticsearch.action.bulk.BulkResponse;

import org.elasticsearch.action.delete.DeleteRequestBuilder;

import org.elasticsearch.action.support.WriteRequest;

import org.elasticsearch.action.update.UpdateRequestBuilder;

import java.net.UnknownHostException;

import java.util.concurrent.Executors;

import java.util.concurrent.ScheduledExecutorService;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

public class ElasticSearchBulkOperator {

private static final Log LOG = LogFactory.getLog(ElasticSearchBulkOperator.class);

private static final int MAX_BULK_COUNT = 10000;

private static BulkRequestBuilder bulkRequestBuilder = null;

private static final Lock commitLock = new ReentrantLock();

private static ScheduledExecutorService scheduledExecutorService = null;

static {

// initialize bulkRequestBuilder

bulkRequestBuilder = ESClient.client.prepareBulk();

bulkRequestBuilder.setRefreshPolicy(WriteRequest.RefreshPolicy.IMMEDIATE);

// create a scheduled thread pool of size 1

scheduledExecutorService = Executors.newScheduledThreadPool(1);

// create a Runnable that commits the pending data, using commitLock for thread safety

final Runnable beeper = () -> {

commitLock.lock();

try {

LOG.info("Before submission bulkRequest size : " +bulkRequestBuilder.numberOfActions());

// commit the data to ES

bulkRequest(0);

LOG.info("After submission bulkRequest size : " +bulkRequestBuilder.numberOfActions());

} catch (Exception ex) {

LOG.error("scheduled bulk commit failed : " + ex.getMessage(), ex);

} finally {

commitLock.unlock();

}

};

// run the Runnable after an initial delay of 10s, then every 30s

scheduledExecutorService.scheduleAtFixedRate(beeper, 10, 30, TimeUnit.SECONDS);

}

public static void shutdownScheduEx() {

if (null != scheduledExecutorService && !scheduledExecutorService.isShutdown()) {

scheduledExecutorService.shutdown();

}

}

private static void bulkRequest(int threshold) {

if (bulkRequestBuilder.numberOfActions() > threshold) {

BulkResponse bulkItemResponse = bulkRequestBuilder.execute().actionGet();

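if (!bulkItemResponse.hasFailures()) {

bulkRequestBuilder = ESClient.client.prepareBulk();

} else {

// on failure the builder is kept, so the whole batch is resubmitted at the next commit

LOG.error(bulkItemResponse.buildFailureMessage());

}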

}

}

/**

* add update builder to bulk

* uses commitLock to keep access to the bulk builder thread-safe

* @param builder

*/

public static void addUpdateBuilderToBulk(UpdateRequestBuilder builder) {

commitLock.lock();

try {

bulkRequestBuilder.add(builder);

bulkRequest(MAX_BULK_COUNT);

} catch (Exception ex) {

LOG.error(" update Bulk index error : " + ex.getMessage(), ex);

try {

ESClient.initEsClient(); // re-initialize the connection

Thread.sleep(500);

} catch (Exception e) {

LOG.error("re-initializing ES client failed : " + e.getMessage(), e);

}

} finally {

commitLock.unlock();

}

}

/**

* add delete builder to bulk

* uses commitLock to keep access to the bulk builder thread-safe

*

* @param builder

*/

public static void addDeleteBuilderToBulk(DeleteRequestBuilder builder) {

commitLock.lock();

try {

bulkRequestBuilder.add(builder);

bulkRequest(MAX_BULK_COUNT);

} catch (Exception ex) {

LOG.error(" delete Bulk index error : " + ex.getMessage(), ex);

} finally {

commitLock.unlock();

}

}

}
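The batching idea in ElasticSearchBulkOperator (buffer actions, flush when a size threshold is reached or when a timer fires) can be shown on its own. The following is a sketch using only the JDK and is not the class above: `BatchBuffer` and its `sink` callback are hypothetical names, and the sink stands in for the Elasticsearch bulk call. Unlike the original, which flushes once the count exceeds the threshold, this sketch flushes when the count reaches it.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

/** Buffers actions and hands them to a sink in batches. */
final class BatchBuffer<T> {
    private final int maxBatchSize;
    private final Predicate<List<T>> sink; // returns true when the whole batch was accepted
    private final List<T> pending = new ArrayList<>();

    BatchBuffer(int maxBatchSize, Predicate<List<T>> sink) {
        this.maxBatchSize = maxBatchSize;
        this.sink = sink;
    }

    /** Adds one action and flushes once the buffer holds at least maxBatchSize actions. */
    synchronized void add(T action) {
        pending.add(action);
        if (pending.size() >= maxBatchSize) {
            flush();
        }
    }

    /**
     * Sends everything pending to the sink. The buffer is cleared only when the
     * sink reports success, so a failed batch is retried on the next flush.
     */
    synchronized boolean flush() {
        if (pending.isEmpty()) {
            return true;
        }
        boolean accepted = sink.test(new ArrayList<>(pending));
        if (accepted) {
            pending.clear();
        }
        return accepted;
    }

    synchronized int size() {
        return pending.size();
    }
}
```

A timer-driven commit, like the 30-second task above, would simply call `flush()` from a `ScheduledExecutorService`. Because a rejected batch stays in the buffer, a long outage lets it grow without bound, so a production version would probably want a cap or a dead-letter path.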

- pom file

<properties>

<hadoop.version>3.0.0</hadoop.version>

<hbase.version>2.0.0</hbase.version>

<elasticsearch.version>6.3.0</elasticsearch.version>

<hutool.version>5.3.10</hutool.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>2.0.6</version>

<exclusions>

<exclusion>

<artifactId>jdk.tools</artifactId>

<groupId>jdk.tools</groupId>

</exclusion>

<exclusion>

<groupId>io.netty</groupId>

<artifactId>netty-all</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<groupId>org.apache.htrace</groupId>

<artifactId>htrace-core</artifactId>

</exclusion>

</exclusions>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.elasticsearch</groupId>

<artifactId>elasticsearch</artifactId>

<version>${elasticsearch.version}</version>

</dependency>

<dependency>

<groupId>org.elasticsearch.client</groupId>

<artifactId>transport</artifactId>

<version>${elasticsearch.version}</version>

</dependency>

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-all</artifactId>

<version>${hutool.version}</version>

</dependency>

</dependencies>

4. Creating the Elasticsearch Index

# Create the index and set the number of replicas and shards

PUT user_test

{

"settings": {

"number_of_replicas": 1,

"number_of_shards": 5

}

}

# Define the index mapping

PUT user_test/_mapping/user_test_type

{

"user_test_type":{

"properties":{

"name":{"type":"text"},

"city":{"type":"text"},

"province":{"type":"text"},

"interest":{"type":"text"},

"followers_count":{"type":"long"},

"friends_count":{"type":"long"},

"statuses_count":{"type":"long"}

}

}

}

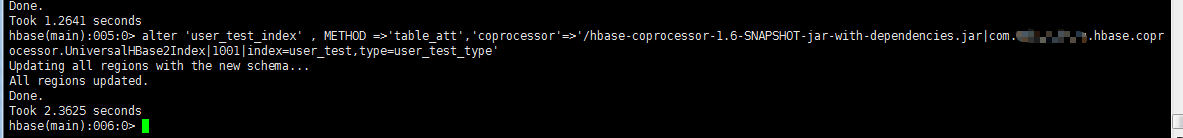

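5. Installing and Uninstalling the Coprocessor

- Preparation: package the coprocessor code as a jar and upload it to HDFS; this example uploads it to the "/" directory

- Installing the coprocessor

disable 'user_test_index' # disable the HBase table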

alter 'user_test_index' , METHOD =>'table_att','coprocessor'=>'/hbase-coprocessor-1.6-SNAPSHOT-jar-with-dependencies.jar|com.xxx.hbase.coprocessor.UniversalHBase2Index|1001|index=user_test,type=user_test_type'

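enable 'user_test_index' # enable the table

desc 'user_test_index' # check whether the coprocessor is attached; this only shows the attachment state. To confirm it actually works, put some data and check that ES receives it

# Parameter notes

# user_test_index: the HBase table name

# hbase-coprocessor-1.6-SNAPSHOT-jar-with-dependencies.jar: the coprocessor jar

# com.xxx.hbase.coprocessor.UniversalHBase2Index: the fully qualified class name of the coprocessor

# index: the Elasticsearch index name

# type: the type within that index

# Note: after changing the coprocessor code, the new version takes effect only if you first uninstall the existing coprocessor from the table, then rebuild the jar and rename it (this is the key step), upload it to HDFS, and reinstall using the new jar name.

# Why rename the jar: once the coprocessor is installed, the RegionServer has already loaded the jar into its JVM. If the name is unchanged, reinstalling still resolves to the old jar and nothing is replaced.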
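The value passed to `'coprocessor'=>` above has four `|`-separated fields: jar path, class name, priority, and a comma-separated list of `key=value` arguments (which is how `index` and `type` reach `start()` through the configuration). The sketch below only illustrates that layout. `CoprocessorSpec` is a hypothetical helper written for this article, not HBase's own parser, and it is stricter than HBase about which fields may be empty.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Simplified, illustrative parser for "jar|class|priority|k1=v1,k2=v2". */
final class CoprocessorSpec {
    final String jarPath;
    final String className;
    final int priority;
    final Map<String, String> args;

    private CoprocessorSpec(String jarPath, String className, int priority, Map<String, String> args) {
        this.jarPath = jarPath;
        this.className = className;
        this.priority = priority;
        this.args = args;
    }

    static CoprocessorSpec parse(String spec) {
        String[] parts = spec.split("\\|", -1);
        if (parts.length < 3 || parts.length > 4) {
            throw new IllegalArgumentException("expected jar|class|priority[|args]: " + spec);
        }
        Map<String, String> args = new LinkedHashMap<>();
        if (parts.length == 4 && !parts[3].trim().isEmpty()) {
            for (String pair : parts[3].split(",")) {
                String[] kv = pair.split("=", 2);
                if (kv.length != 2) {
                    throw new IllegalArgumentException("expected key=value: " + pair);
                }
                args.put(kv[0].trim(), kv[1].trim());
            }
        }
        return new CoprocessorSpec(parts[0].trim(), parts[1].trim(),
                Integer.parseInt(parts[2].trim()), args);
    }
}
```

Parsing the string from the `alter` command would yield the class name `com.xxx.hbase.coprocessor.UniversalHBase2Index`, priority `1001`, and the two arguments `index=user_test` and `type=user_test_type`.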

- Uninstalling the coprocessor

disable 'user_test_index' # disable the table

alter 'user_test_index', METHOD => 'table_att_unset', NAME => 'coprocessor$1'

enable 'user_test_index'

desc 'user_test_index'

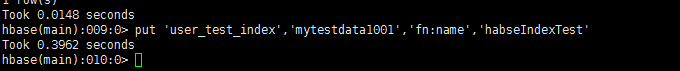

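6. End-to-End Test, Questions and Comments

- Upload the coprocessor jar to HDFS

- Install the coprocessor

The disable and related steps are not shown again here

- Test inserting data

Before inserting data, make sure the corresponding index has been created in Elasticsearch (follow step 4)

- Query Elasticsearch to check that the data arrived

The data has been indexed successfully

- Questions and comments

This article implements a general-purpose coprocessor for building HBase secondary indexes. To use it on a table, you only specify the corresponding index name and type name when installing it; no per-table development is needed.

If you have questions, leave a comment. Discussion is welcome!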
